AR has a major usability problem: how do you interact with the program? After all, you can’t (easily) carry around a keyboard. MIT’s Sixth Sense uses a video camera to discern how you are interacting with a projected interface. At the CHI conference this week there’s a presentation on a novel way of using the capacitance of skin (among other effects) to figure out what the user is doing.

Touché: Enhancing Touch Interaction on Humans, Screens, Liquids, and Everyday Objects

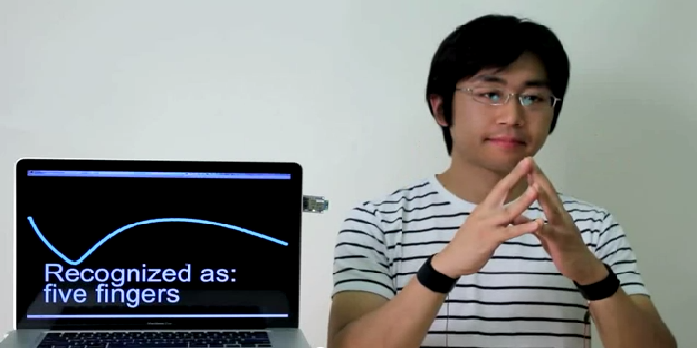

Using a very novel Swept Frequency Capacitive Sensing technique, they are able to figure out roughly what gross action or gesture the user is making, including whether they are touching one, two, three, etc. fingers together.
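To make the idea a bit more concrete, here is a toy sketch of how a swept-frequency reading might be turned into a gesture label. Everything in it is an assumption for illustration: the sweep range, the made-up response curves, the gesture names, and the nearest-template matching are mine, not the Touché authors' actual pipeline or data.

```python
import math

# Hypothetical sweep: 100 frequency steps (kHz). Not the real device's range.
FREQS_KHZ = [1 + 35 * i for i in range(100)]

def synthetic_profile(resonance_khz, depth):
    """Made-up response curve: flat signal with a dip around one frequency."""
    return [
        1.0 - depth * math.exp(-((f - resonance_khz) / 300.0) ** 2)
        for f in FREQS_KHZ
    ]

# Invented reference curves, one per gesture (stand-ins for recorded training data).
TEMPLATES = {
    "no touch": synthetic_profile(3000, 0.1),
    "one finger": synthetic_profile(2400, 0.3),
    "two fingers": synthetic_profile(1800, 0.5),
}

def distance(a, b):
    """Euclidean distance between two response curves of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def classify(profile):
    """Return the gesture whose template curve is closest to the measured one."""
    return min(TEMPLATES, key=lambda name: distance(profile, TEMPLATES[name]))

# A slightly perturbed "one finger" reading, standing in for a live measurement.
measured = synthetic_profile(2350, 0.32)
print(classify(measured))
```

The point is only that the whole curve across many frequencies, rather than a single touch/no-touch value, is what gets matched against known gestures.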

It probably goes totally wrong if it’s raining or you’re sweaty, but not having to interact with a projected interface and instead just “touch typing,” as it were, is definitely pretty cool. Nice introductory video here.