This is a long-winded answer to a Quora question, reproduced here.

TLDR: They will blend together into an always-on digital overlay that you will live in 24/7. And you’ll love it.

It’s actually much closer than you think. Very soon we will have headsets that are lightweight, long-lasting, comfortable and fashionable. You already have a high-powered computing device with always-on connectivity in your pocket right now: your cell phone. In the short term we will have headsets that are either tethered or wirelessly connected to your phone, serving as your VR/MR display. There is ongoing research into contact lenses with built-in display technology, so it’s likely that headsets will eventually disappear altogether.

This won’t apply much to VR, which is a special-use case, but MR will become something you get used to having constant access to. Just as most people don’t actually use their cell phones as phones but as general-purpose computing and communication devices, MR will simply blend into the background of your everyday life.

Let’s take a look at one such “killer app” that will come into use: the “Mirror World” and what it enables.

(TLDR: The Mirror World is, AFAIK, the “rest” of the world: the “inside” that Google Maps doesn’t cover, plus a lot of additional location-specific, real-time AR metadata from a variety of sources.)

Your phone’s GPS can already place you within a few meters. As the Mirror World comes online, your location will be derived far more precisely, and your high-bandwidth, always-on internet connection will let you query a plethora of Mirror World databases for a wealth of information about the world, including the people and places around you. That coverage extends inside buildings and to other locations of interest.

Governments, organizations and individuals will be able to place virtual anchors at real-world locations that serve up pop-up information about that particular spot.

This can be anything from your doctor providing a route to their office (shown as a series of directional markers down the street, as today’s Maps apps already do, then through the building and into the elevator to the correct floor, with waypoints right up to their front door) to serving up nearby PokéStops and Pokémon.

All this information will be filtered by the AR layers you choose to follow, and it will show up in your MR headset as information geotagged to the real world.
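To make the idea of opt-in layers a little more concrete, here is a minimal Python sketch, assuming each anchor is simply a latitude/longitude point tagged with one layer name. `Anchor`, `visible_anchors`, the layer names and the 100 m default radius are all hypothetical illustrations, not any real Mirror World API. It keeps only anchors on layers you’ve subscribed to that lie within a given radius, using the haversine great-circle distance, and returns them nearest first.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Anchor:
    """Hypothetical geotagged AR anchor belonging to a single named layer."""
    lat: float
    lon: float
    layer: str
    info: str


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def visible_anchors(anchors, user_lat, user_lon, subscribed_layers, radius_m=100.0):
    """Return anchors on the user's chosen layers within radius_m, nearest first."""
    hits = []
    for anchor in anchors:
        if anchor.layer not in subscribed_layers:
            continue  # the user hasn't opted into this layer
        d = haversine_m(user_lat, user_lon, anchor.lat, anchor.lon)
        if d <= radius_m:
            hits.append((d, anchor))
    hits.sort(key=lambda pair: pair[0])
    return [anchor for _, anchor in hits]


# Usage with made-up anchors: one doctor's-office marker ~11 m away,
# one PokéStop ~24 m away, and one clinic a few kilometers away.
anchors = [
    Anchor(37.7750, -122.4194, "medical", "Dr. office entrance"),
    Anchor(37.7751, -122.4195, "games", "PokéStop"),
    Anchor(37.8000, -122.4000, "medical", "Far clinic"),
]
medical_only = visible_anchors(anchors, 37.7749, -122.4194, {"medical"})
everything = visible_anchors(anchors, 37.7749, -122.4194, {"medical", "games"})
print([a.info for a in medical_only])
print([a.info for a in everything])
```

Filtering by layer before computing distances is just a cheap way to skip anchors you haven’t opted into; a real service would more likely use a spatial index than scan every anchor.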

We already have various “Maps” apps to get you to a location on the outside; the Mirror World will cover the last bit of information, the “inside”. For example, the Waze app’s road database is constantly updated and refined by actual users’ data as they drive a route. In the same way, location data and crowdsourcing will soon map the rest of the world in constantly updating detail that will be available for you to use. Niantic already has a global database they use for Pokémon Go, and more than a few startups (like 6d.ai) are providing the tools to map the real world. This tech race has already started.

I’m going to gloss over the many other compelling use cases: instantly knowing about emails and IMs from friends, video conferencing, having an AI personal assistant, and so on. MR will be your interface to the digital world, and as the real world gets digitized, the two will start blending together.

So it’s not about whether you spend time “in” VR or MR. It will just always be there, and you’ll be able to dial it up or down as you like.

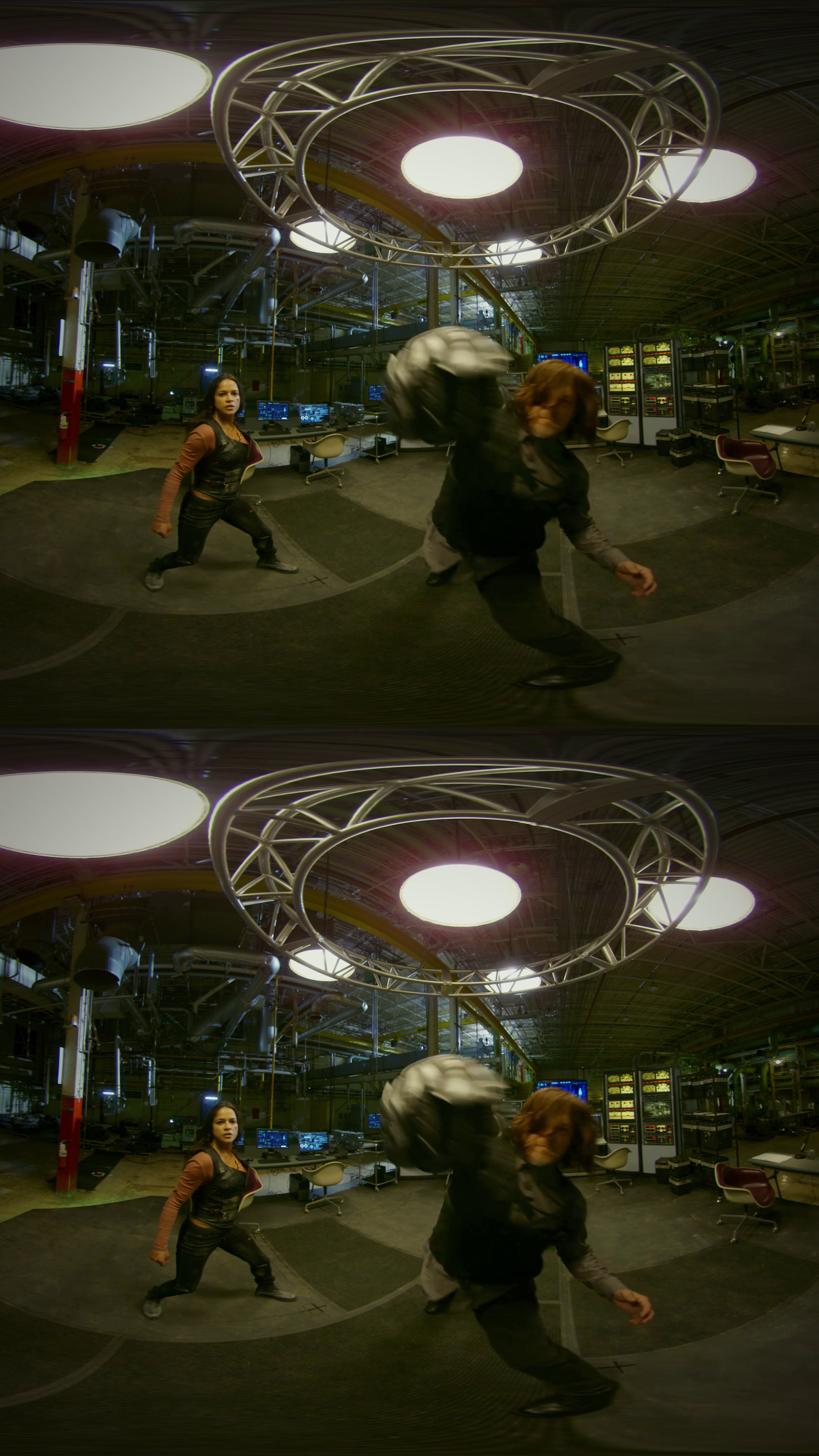

A still from the video
