“Inside ‘The Field Trip to Mars,’ the Single Most Awarded Campaign at Cannes 2016: McCann and Framestore dissect their Lockheed Martin marvel.” So reads the Adweek headline about the Lockheed Martin Mars Bus and its huge success at the Cannes Lions International Festival of Creativity.

It is one of the most demanding large-scale, mobile, group VR experiences ever created. Framestore, working with McCann NY, successfully launched Lockheed Martin’s Project Beyond: Mars Bus for the 2016 USA Science and Engineering Festival at the Washington DC Science Convention on April 15th. We ran about 2,400 thrilled bus riders through the experience over the two days of the convention, and took school kids out for the actual “live” VR experience the day before.

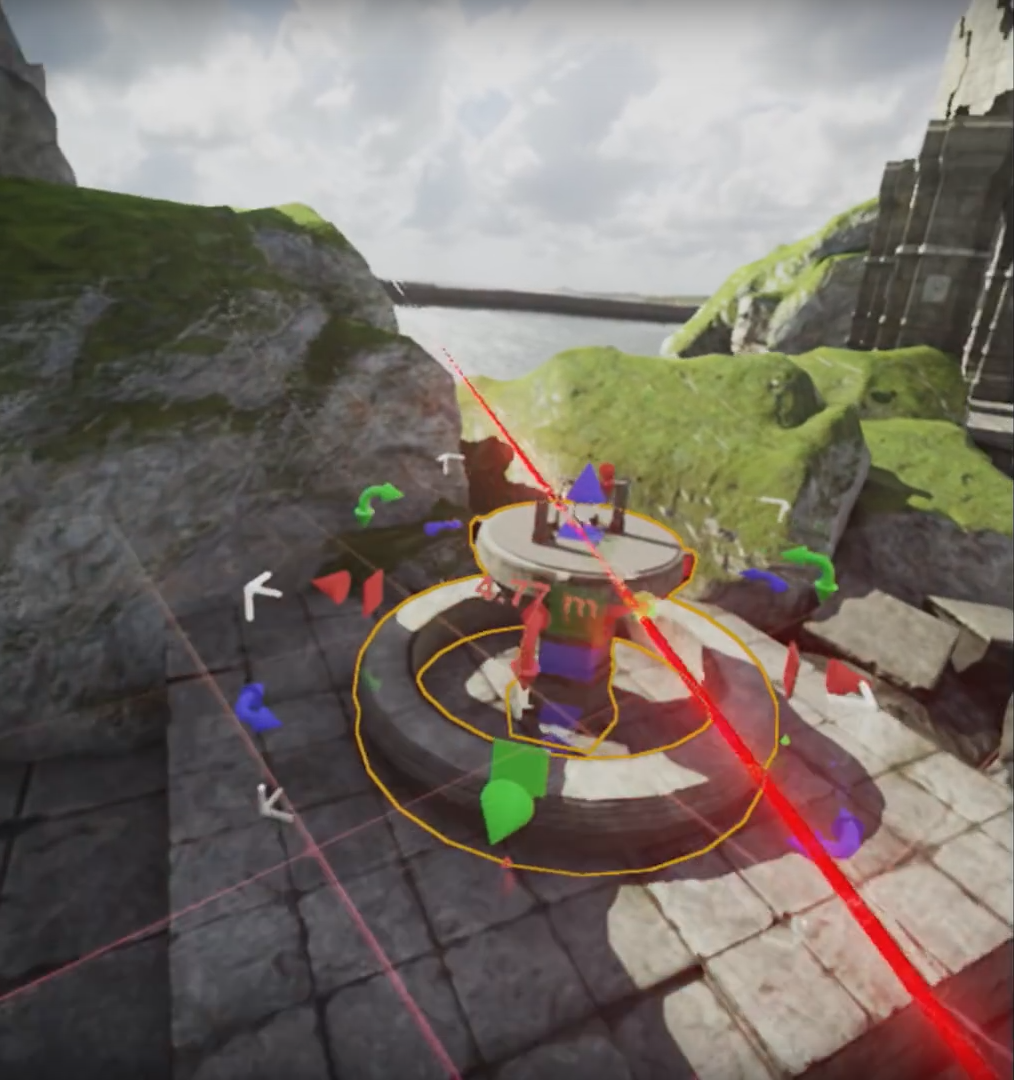

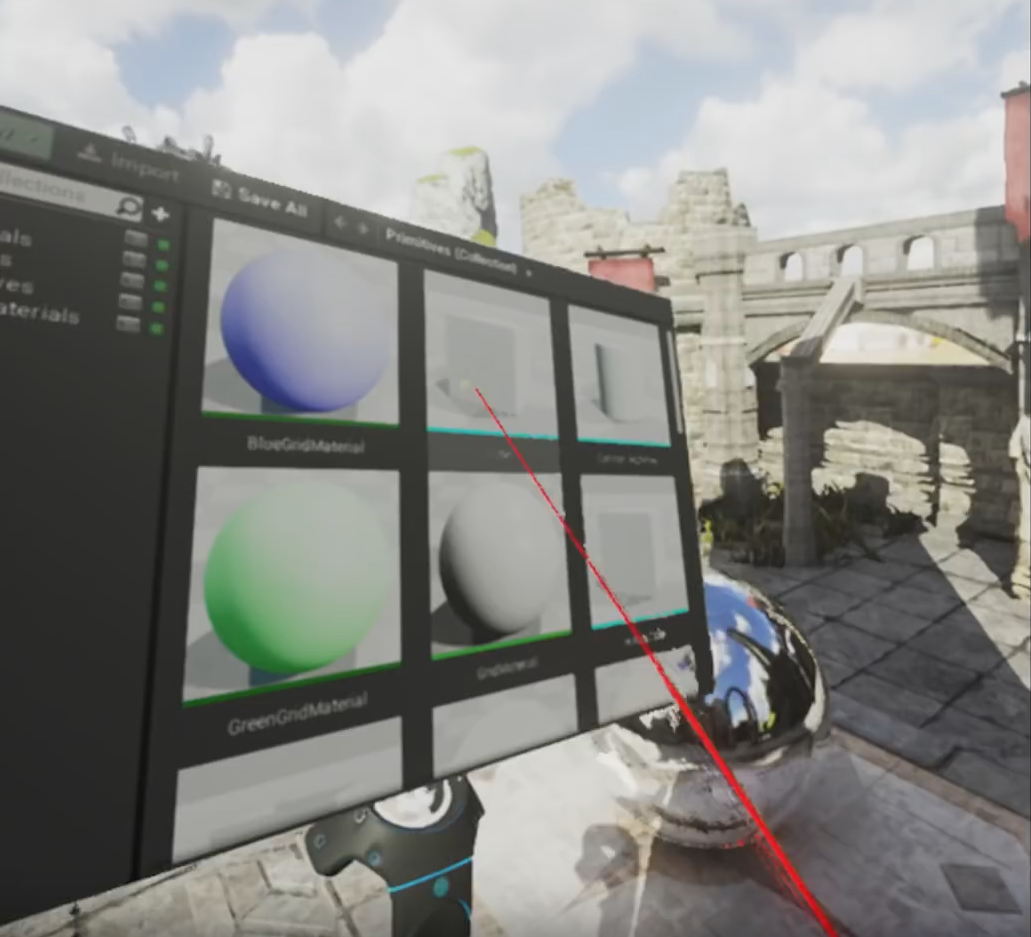

What other project involves a school bus equipped with opaque/transparent windows, transparent/opaque 4K monitors, 6 rack-mounted gaming PCs, its own private network, an A/C unit and diesel generator, and a suite of real-time GPS, inertial sensors, compass, and laser velocity readers? The software consisted of a custom-built data fusion application that output real-time velocity, heading, acceleration, and positional data to a suite of PCs running the Unreal Engine Mars Bus simulation, rendered through various “windows” onto Mars. All of it was packed into a moving school bus. It was a combination that had never been tried before, and we pulled it off.
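
To give a feel for what a data fusion step can look like, here is a minimal sketch in Python. It is not the application we shipped: the `BusState` fields, the `fuse_step` function, and the blending weights are all hypothetical, and the simple complementary-filter approach (gyro for short-term heading, compass to cancel drift, laser speed for dead reckoning, GPS to pull the position back) is an assumption chosen for illustration.

```python
import math
from dataclasses import dataclass


def wrap_degrees(angle: float) -> float:
    """Wrap an angle to the range [0, 360)."""
    return angle % 360.0


def shortest_turn(from_deg: float, to_deg: float) -> float:
    """Signed smallest rotation between two headings, in [-180, 180)."""
    return (to_deg - from_deg + 180.0) % 360.0 - 180.0


@dataclass
class BusState:
    """Hypothetical fused state; field names are illustrative only."""
    x_m: float = 0.0          # east offset from the start point, metres
    y_m: float = 0.0          # north offset from the start point, metres
    heading_deg: float = 0.0  # 0 = north, 90 = east
    speed_mps: float = 0.0


def fuse_step(state, dt, gyro_yaw_rate_dps, compass_deg, laser_speed_mps,
              gps_xy=None, compass_weight=0.02, gps_weight=0.1):
    """Advance the state by dt seconds using one reading from each sensor.

    The weights are made-up tuning values, not numbers from the project.
    """
    # Short term: integrate the gyro. Long term: lean toward the compass.
    predicted = state.heading_deg + gyro_yaw_rate_dps * dt
    correction = compass_weight * shortest_turn(predicted, compass_deg)
    heading = wrap_degrees(predicted + correction)

    # Dead-reckon the position from the laser speed and the fused heading.
    rad = math.radians(heading)
    x = state.x_m + laser_speed_mps * dt * math.sin(rad)
    y = state.y_m + laser_speed_mps * dt * math.cos(rad)

    # When a GPS fix (already in local metres) arrives, move part way to it.
    if gps_xy is not None:
        x += gps_weight * (gps_xy[0] - x)
        y += gps_weight * (gps_xy[1] - y)

    return BusState(x, y, heading, laser_speed_mps)
```

Calling `fuse_step` once per sensor tick yields a smooth position and heading even when an individual sensor is noisy or briefly drops out, which is the general point of fusing several sources.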

I came up with the overall architecture of the software applications. The software team was then able to test proofs of concept that let us push the limits of what had been attempted before. We quickly settled on Unreal running a driving simulation, and then needed to figure out how to make Mars drivable, both in the software simulation and in a real-world sense: whatever the bus did in the real world had to be simulated in real time in the Mars sim, marrying reality with simulation.
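
One small piece of marrying reality with simulation is turning a GPS latitude/longitude into flat map coordinates that a game engine can use. The sketch below uses a standard equirectangular approximation, which is reasonable over a city-sized area. The function name `geo_to_local` and the origin used in the usage note are illustrative assumptions, not details from the project.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, metres


def geo_to_local(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Convert a GPS fix to (east_m, north_m) relative to a chosen origin.

    Equirectangular approximation: treats the area around the origin as
    flat, which is fine over tens of kilometres but not across continents.
    """
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    north_m = EARTH_RADIUS_M * d_lat
    # Lines of longitude converge toward the poles, so scale by cos(lat).
    east_m = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat_deg))
    return east_m, north_m
```

For example, `geo_to_local(38.901, -77.02, 38.9, -77.02)` (an approximate downtown DC origin, picked only as a placeholder) gives a point roughly 111 metres north of the origin, which could then be scaled into the simulation's world units.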

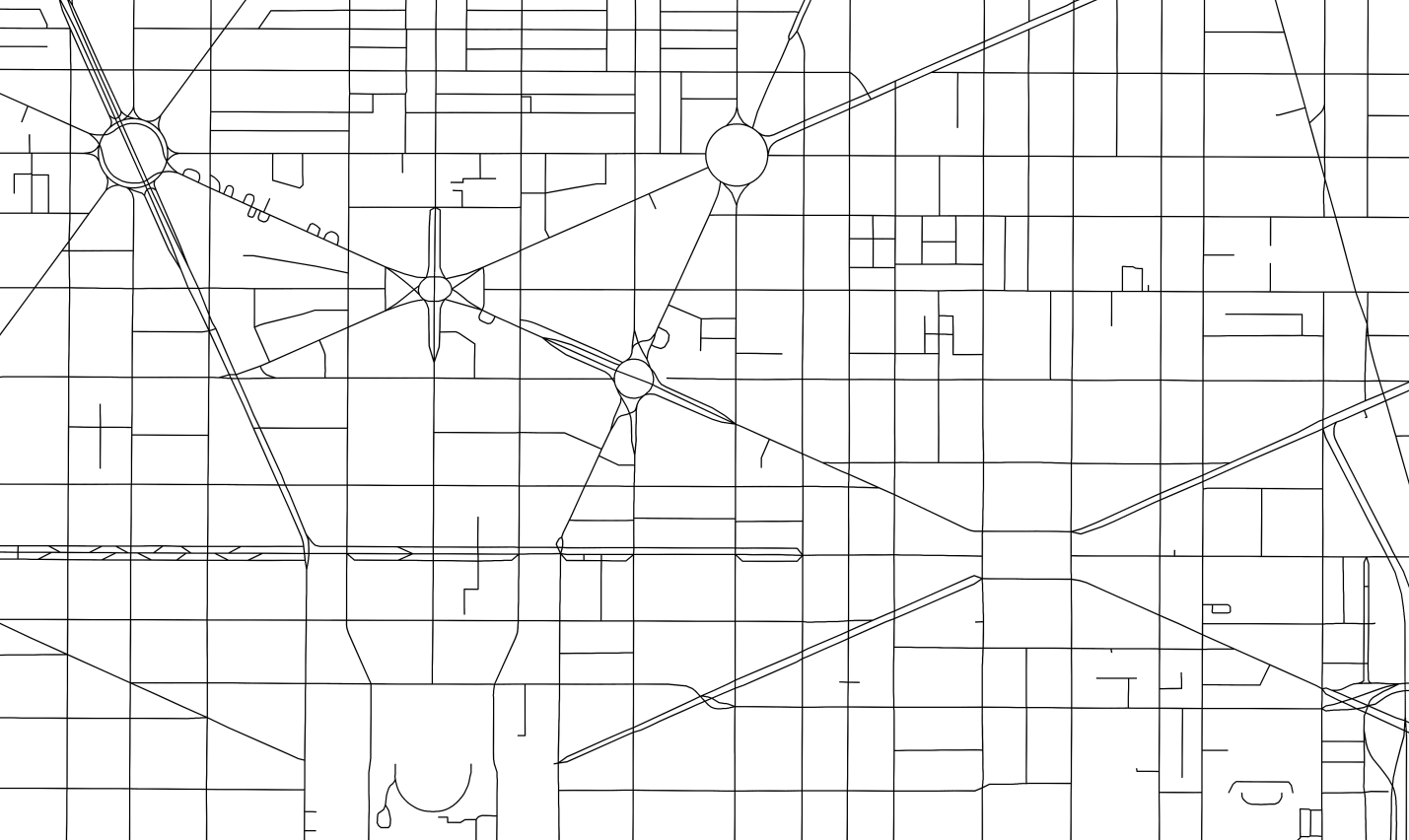

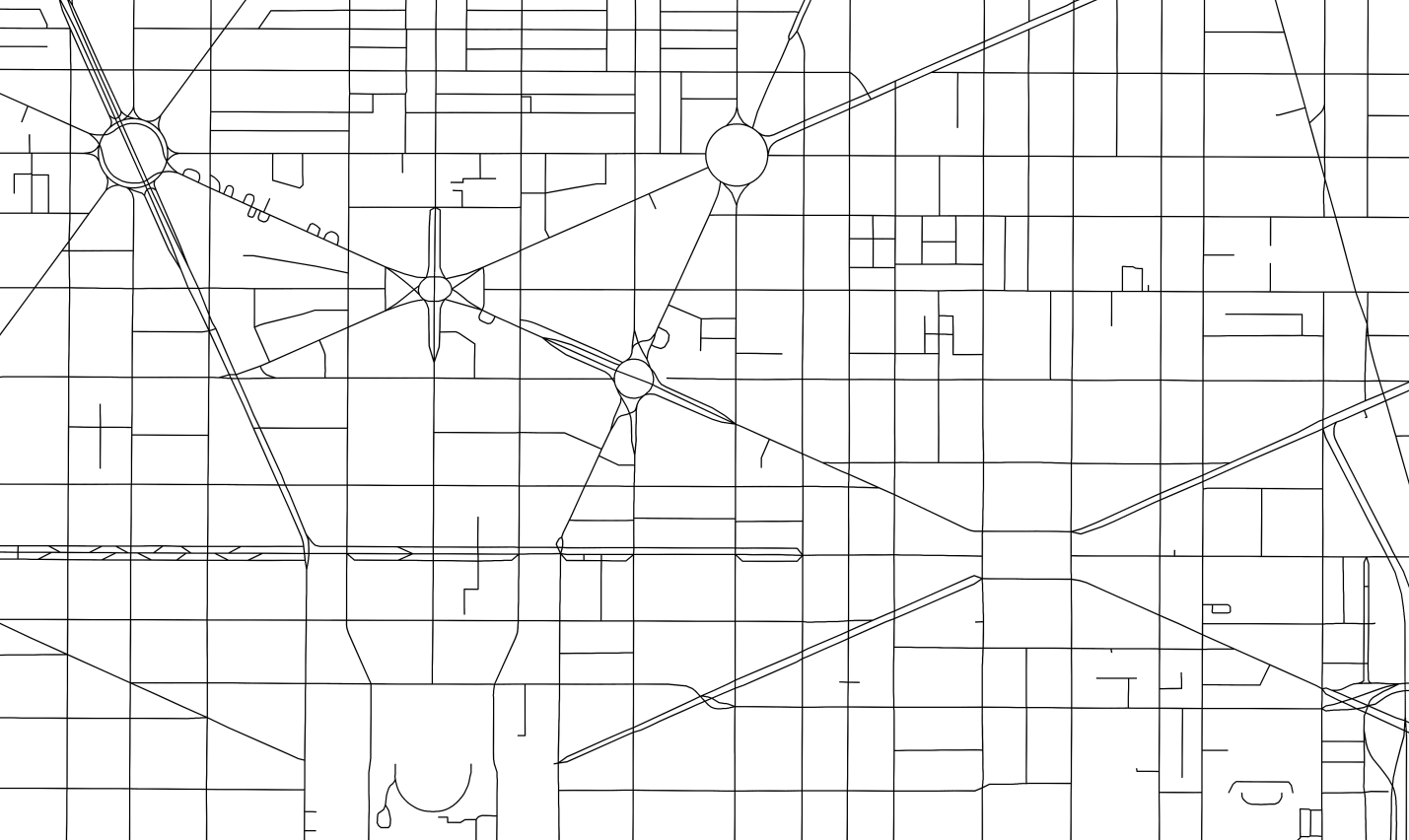

A real map of DC streets was used to generate the “drivable” area of “Mars”, making it possible to drive through the DC metropolitan area (215 sq km) while avoiding the “randomly” placed rocks and mountains of Mars. Thus the 24 kids got to drive around DC while seeing Mars go by in the windows. If the bus stopped, turned, or went over a bump, so did the kids, making it the largest mobile, real-time group VR experience I believe has ever been created. There were some highlights that we wanted the kids to experience: a drive-by of the Mars Rover, a trip through a futuristic Mars Base Camp, and a drive through a Martian sandstorm. The sandstorm took advantage of our 500-watt 5.1 sound system, which was plenty loud in an enclosed bus; the impact sounds gave a visceral feel that things were actually hitting the bus. (See the experience video.)
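
The idea of “random” rocks that never land on a street can be sketched with simple rejection sampling: pick a random spot, and keep it only if it is far enough from every road segment. This is an illustration under assumptions, not the terrain pipeline we used in Unreal; `scatter_rocks`, the road representation (pairs of 2D points in metres), and the clearance value are all hypothetical.

```python
import math
import random


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the line segment a-b (2D tuples)."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment
    # Project p onto the segment and clamp to its end points.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def scatter_rocks(roads, count, bounds, clearance_m, seed=0,
                  max_attempts=100_000):
    """Place up to `count` rocks inside `bounds`, none near a road.

    roads  -- list of ((x1, y1), (x2, y2)) segments in metres
    bounds -- (min_x, min_y, max_x, max_y) of the terrain
    May return fewer rocks if max_attempts runs out first.
    """
    rng = random.Random(seed)  # seeded, so the same "random" Mars every run
    min_x, min_y, max_x, max_y = bounds
    rocks = []
    attempts = 0
    while len(rocks) < count and attempts < max_attempts:
        attempts += 1
        candidate = (rng.uniform(min_x, max_x), rng.uniform(min_y, max_y))
        if all(distance_to_segment(candidate, a, b) >= clearance_m
               for a, b in roads):
            rocks.append(candidate)
    return rocks
```

Checking every segment for every candidate is fine for a sketch; a map the size of a metropolitan area would want a spatial index (a grid or quadtree) so each candidate only tests nearby streets.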

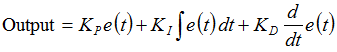

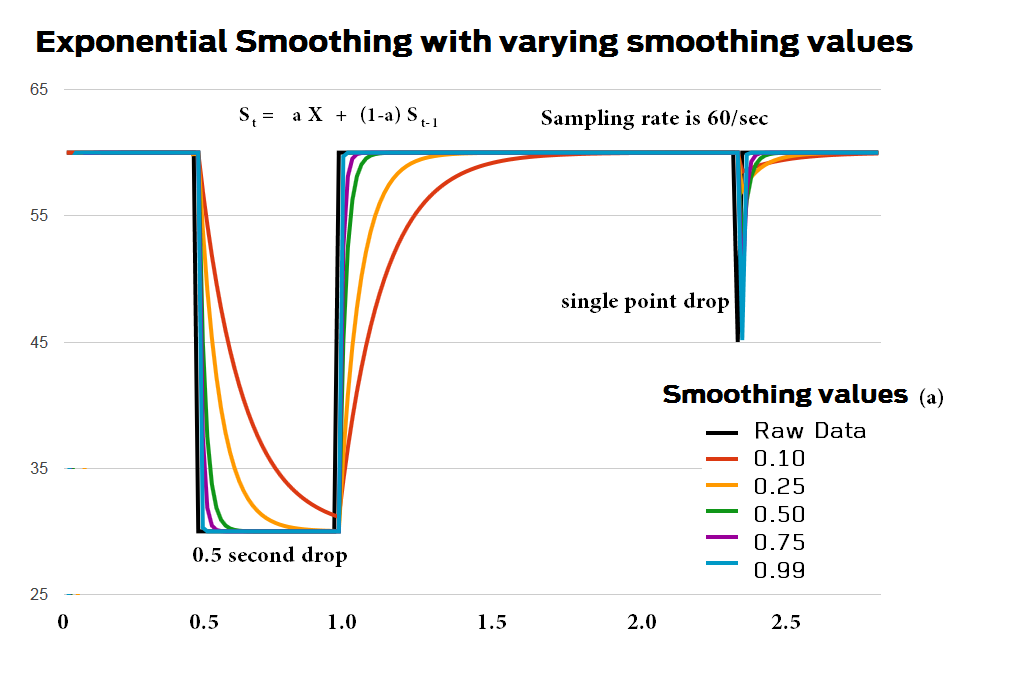

There was a server rack of gaming PCs in the back of the bus. Four were dedicated to driving the 80-inch 4K monitors. One PC did the data acquisition and data fusion, and sent position, velocity, and direction info out onto the network via UDP to be picked up by the 5 simulation PCs: the four driving the monitors plus an additional one showing the real-time, top-down bus position on the road map, which served as a witness to the accuracy of the simulation. An Xbox controller was attached so that the position and speed could be manually adjusted if needed, or if the GPS system went south. We never needed to use it. Finally, there was one PC for the “monkey”, the poor sod who had to sit in the back with the servers in a dark, tiny room on a folding chair, and whose job it was to start and, if necessary, restart the experience. That PC was also used for systems monitoring, a lot of which was added at the last minute because we initially had some trouble with PCs failing to respond. We finally added software that pinged each PC every second to make sure it was responding. The simulation status and PC status were all shown on the experience control display.
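
The two network pieces described above, a state packet sent over UDP and a once-a-second responsiveness check, can be sketched as follows. Everything specific here is an assumption for illustration: the packet layout, the use of UDP broadcast, the port number, and the timeout are not the values from the bus.

```python
import socket
import struct

# Hypothetical wire format: little-endian sequence number (uint32) followed
# by five doubles: timestamp, x, y, speed, heading.
STATE_FORMAT = "<I5d"
STATE_SIZE = struct.calcsize(STATE_FORMAT)  # 44 bytes


def pack_state(seq, timestamp, x_m, y_m, speed_mps, heading_deg):
    """Encode one fused bus state as a fixed-size datagram payload."""
    return struct.pack(STATE_FORMAT, seq, timestamp,
                       x_m, y_m, speed_mps, heading_deg)


def unpack_state(payload):
    """Decode a payload produced by pack_state; reject odd-sized packets."""
    if len(payload) != STATE_SIZE:
        raise ValueError(f"expected {STATE_SIZE} bytes, got {len(payload)}")
    seq, timestamp, x_m, y_m, speed_mps, heading_deg = struct.unpack(
        STATE_FORMAT, payload)
    return {"seq": seq, "timestamp": timestamp, "x_m": x_m, "y_m": y_m,
            "speed_mps": speed_mps, "heading_deg": heading_deg}


def make_broadcast_socket():
    """UDP socket allowed to broadcast (broadcast is an assumption here)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
    return sock


def broadcast_state(sock, payload, address=("255.255.255.255", 50000)):
    """Send one payload to every listener; the port is a placeholder."""
    sock.sendto(payload, address)


def unresponsive_hosts(last_reply, now, timeout_s=3.0):
    """Names of PCs whose last ping reply is older than timeout_s.

    last_reply maps a host name to the time (seconds) of its latest reply.
    """
    return sorted(host for host, seen in last_reply.items()
                  if now - seen > timeout_s)
```

UDP suits this kind of stream because a late packet is useless: each simulation PC just wants the newest state, and the sequence number lets a receiver discard anything that arrives out of order. The `unresponsive_hosts` list is the sort of thing a control display could show in red.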

Once in the convention center we switched over to a canned ride, and once word got out about the experience, we had lines of well over an hour to ride the bus. The oohs and aahs we got, plus the frequent clapping at the end by the thrilled riders, made the demanding 3-month effort to bring it together a cherished accomplishment. Overall it was a pretty daring experience to pull off, given that we were attempting things never attempted before and combining technologies in a way they had never been used together.

The final result was a lot of recognition once the project was shown to the general public. The Field Trip to Mars was the single most awarded campaign at the 2016 Cannes Lions International Festival of Creativity. It got a total of 19 Lions across 11 categories.

- 1 Innovation Lion

- 5 Gold Lions

- 8 Silver Lions

- 5 Bronze Lions

It was also nominated for a Titanium Lion, one of 22 nominees out of 43,101 total entries across all categories from around the world.

ADWEEK also liked the bus: it won top honors from ADWEEK’s Project Isaac, winning 1 Creative Invention, 1 Event/Experience Invention, and the highest award, the Gravity.

In all, it was one of the most challenging VR experiences I have ever had the pleasure to work on. With the extraordinary team of engineers, artists, and producers working furiously during the last week, it came together and created one heck of a VR experience.

Ron’s video links for the Mars Bus:

7 Days to go

less than 24 hours to go…

The experience from inside

It was all worth it!

Framestore

Framestore’s Mars Bus page (click on credits to see who worked on it)

External sites

Lockheed Martin’s new magic school bus wants to virtually take kids to Mars

Lockheed Mars Bus Experience

Alexander Rea’s page

Anima Patil-Sabale’s video as a passenger