tl;dr: Several companies (including Google) have announced very-high-resolution VR/AR displays that should be available soon (2018). Delivering on them means overcoming two problems. First, there isn’t enough memory bandwidth or GPU compute available to drive them, which is to be solved with foveated rendering techniques. Foveated rendering then adds a second problem: gaze tracking is needed to steer it. It turns out that both are well-understood, solvable problems. Coming soon to many HMDs.

In a previous post from two years ago I estimated that a VR “Retina” display would require a resolution of about 7410×6840 per eye. That was based on the FOV specs of the first crop of consumer HMDs. I missed the first subtle reveal (March 2017) that Google is working with a partner on a 20 Mpixel/eye OLED display. While this is excellent news, how does it measure up to the guesstimates in my two-year-old post? And, more importantly, what does it portend for the future?

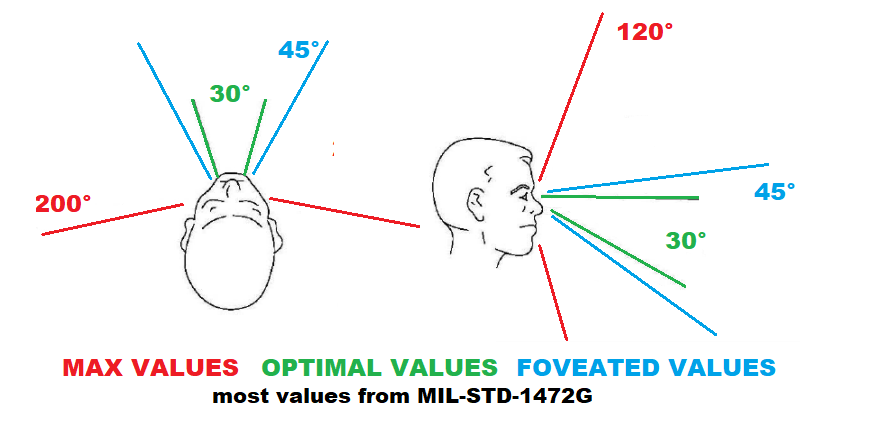

While the video of Clay Bavor’s (VP of VR at Google) SID Display Week keynote (March 2017) was a bit light on specifics, we can guess at some of the values. My original calculations were for a 130° horizontal FOV per eye; we’ll keep my 120° for the vertical FOV. The new display covers 200° horizontally (up from the original 190° total FOV), which gives us 140° of FOV per eye. For a Retina display (@ 57 pixels/degree) this means we’d need a resolution of 7,980 × 6,840. The accepted resolution value for the human fovea is 60 pixels/degree, but we’ll use my original 57 for now.

So fitting 20 Mpixels into our 140° horizontal and 120° vertical FOV gives us an estimate of:

34.5 PPD for the new display (PPD = pixels per degree; √(20,000,000 / (140 × 120)) ≈ 34.5)

Or, rounding to more display-normal values, a panel of about 4960×4096, roughly matching the 7:6 aspect ratio of the 140°×120° FOV.

4960×4096 = 20.3 Mpixels.
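The numbers above are easy to reproduce. This is a minimal sketch, assuming square pixels spread uniformly across the FOV (real HMD optics are not that uniform) and the 57 PPD “Retina” target used in this post:

```python
import math

def estimate_ppd(total_pixels: float, h_fov_deg: float, v_fov_deg: float) -> float:
    """Pixels per degree if square pixels are spread uniformly over the FOV."""
    return math.sqrt(total_pixels / (h_fov_deg * v_fov_deg))

def retina_resolution(h_fov_deg: float, v_fov_deg: float, ppd: float = 57) -> tuple:
    """Per-eye resolution needed to hit a target pixels-per-degree."""
    return round(h_fov_deg * ppd), round(v_fov_deg * ppd)

ppd = estimate_ppd(20e6, 140, 120)
print(f"{ppd:.1f} PPD")                    # 34.5 PPD
print(retina_resolution(140, 120))         # (7980, 6840)
print(round(140 * ppd), round(120 * ppd))  # 4830 4140
print(f"{4960 * 4096 / 1e6:.1f} Mpixels")  # 20.3 Mpixels
```

Spreading 20 Mpixels uniformly gives about 4830×4140 per eye; 4960×4096 is the same pixel budget rounded to friendlier panel dimensions.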

Now, onto practical matters. Pushing 40 Mpixels per frame (20 Mpixels for each eye) is a sh*t-ton of data, especially at the 90–120 Hz frame rates we need for good VR: uncompressed at 24 bits per pixel, that’s roughly 86 Gbit/s (about 11 GB/s) at 90 Hz. Current HDMI 2.1 (48 Gbit/s) and DisplayPort links get within a factor of two or three of that, but, as noted in the video and in MIL-STD-1472, there’s “central vision” and “peripheral vision”.
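A quick back-of-the-envelope check of the raw pixel bandwidth. This sketch assumes 24 bits per pixel and counts only the pixel payload (no compression, blanking intervals, or link-encoding overhead), so real link requirements will differ:

```python
def raw_bandwidth_gbit_s(pixels_per_eye: float, eyes: int, refresh_hz: float,
                         bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel payload in Gbit/s (no blanking or encoding overhead)."""
    return pixels_per_eye * eyes * refresh_hz * bits_per_pixel / 1e9

for hz in (90, 120):
    gbit = raw_bandwidth_gbit_s(20e6, 2, hz)
    print(f"{hz} Hz: {gbit:.1f} Gbit/s ({gbit / 8:.1f} GB/s)")
# 90 Hz: 86.4 Gbit/s (10.8 GB/s)
# 120 Hz: 115.2 Gbit/s (14.4 GB/s)
```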

Central vision is the high-definition, color, feature-resolving area, while peripheral vision is less color-perceptive and much more sensitive to motion, i.e. temporal changes in “pixel” intensity. (The Larson and Loschky paper cited below looks at how each contributes to “gist recognition”, i.e. recognizing a scene at a glance.) It also turns out that the information your brain interpolates from each of these fields is different, so this can be used to fine-tune the perceived scene; it’s not all just blurrier pixels. It’s nicely illustrated by this video:

Look at one ball and the other seems to follow the contours. From the Illusion of the Year contest, 2012 finalists.

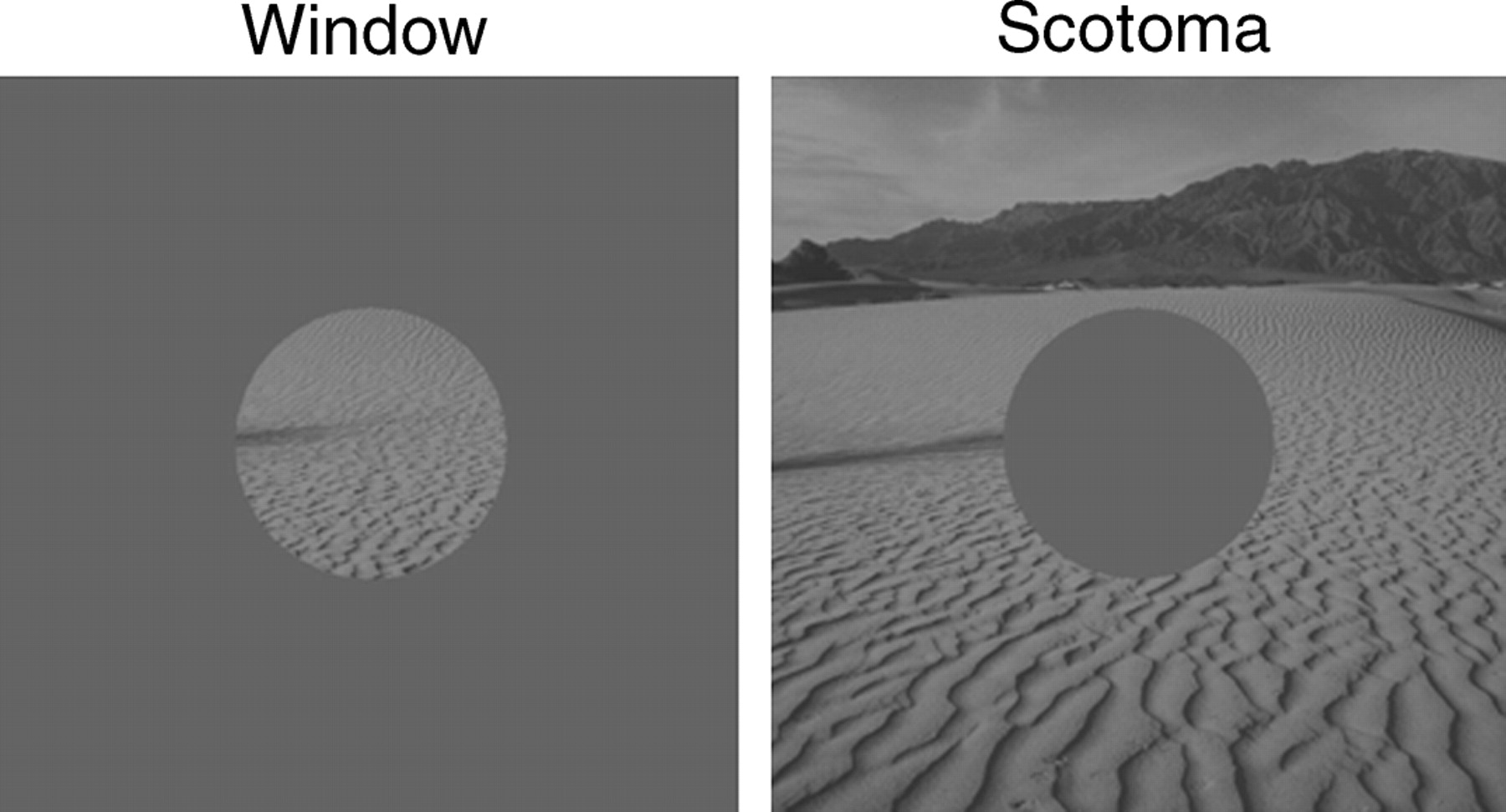

These are also called the “Window” (for central) and the “Scotoma” (for peripheral).

From: Journal of Vision, Sept. 2009. “The contributions of central versus peripheral vision to scene gist recognition” by Adam M. Larson and Lester C. Loschky.

In general, peripheral vision loses resolution of color, detail, and shape, which brings us to “foveated rendering”: rendering just the Window in high resolution and the Scotoma at lower resolution, typically with some sort of blending operation in between.
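To get a feel for why foveation helps with the bandwidth problem, here is a rough estimate of how many pixels actually need shading. It assumes a circular full-resolution Window lying entirely inside a 140°×120° FOV and a periphery rendered at a fixed fraction of full linear resolution. The 15° radius and quarter-resolution periphery are hypothetical values chosen for illustration, not figures from the papers, and the blend region is ignored:

```python
import math

def foveated_shading_fraction(h_fov_deg: float, v_fov_deg: float,
                              window_radius_deg: float, periphery_scale: float) -> float:
    """Fraction of full-resolution pixels shaded with a circular full-res Window
    and a periphery rendered at `periphery_scale` of full linear resolution.

    Pixels per degree cancels out because it is assumed uniform over the FOV.
    Assumes the Window lies entirely inside the FOV; no blend region is modeled.
    """
    fov_area = h_fov_deg * v_fov_deg                # square degrees
    window_area = math.pi * window_radius_deg ** 2  # full-resolution region
    periphery_area = fov_area - window_area
    # A linear scale s cuts the peripheral pixel count by s^2.
    shaded = window_area + periphery_area * periphery_scale ** 2
    return shaded / fov_area

# Hypothetical parameters: 15° Window radius, quarter-resolution periphery.
fraction = foveated_shading_fraction(140, 120, 15, 0.25)
print(f"{fraction:.1%} of the full-resolution pixel count")  # 10.2% of the full-resolution pixel count
```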

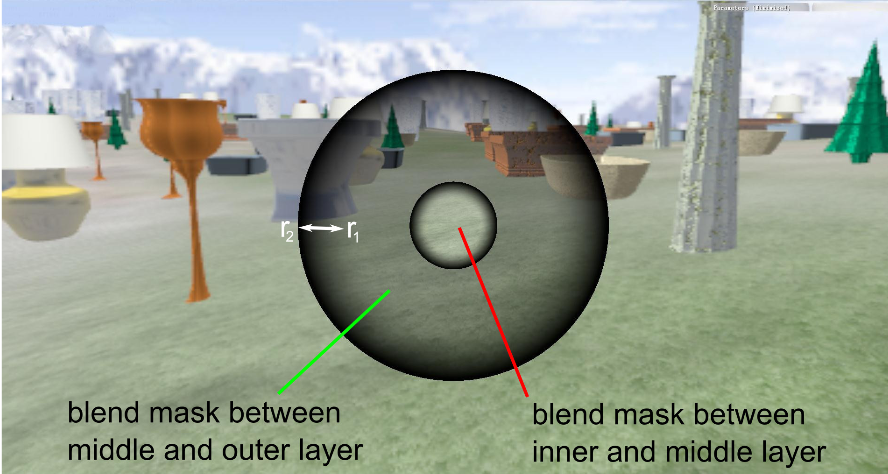

from the Microsoft Research Paper

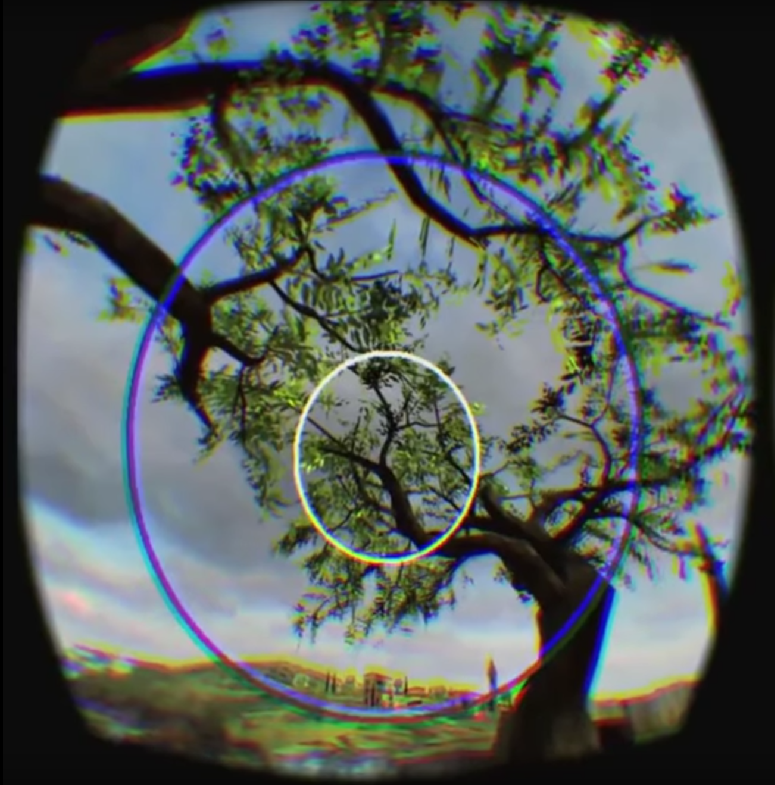

And in practice it looks something like this:

It turns out that this has been an area of research for a while, and the resolution demands of VR and AR have brought quite an uptick in research papers.

In general, most techniques generate three rendering regions: the central (high-resolution) region, the outer (lower-resolution) region, and an intermediate, interpolated region. A surprising result is that some tracking latency is acceptable, and that the technique used to render the foveated area has a significant effect on the perceived smoothness of the experience.
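A minimal sketch of that three-region split, driven by the angular distance (eccentricity) between a point on the display and the current gaze point. The 10° and 20° boundaries are hypothetical, the smoothstep crossfade is only a simple stand-in for the intermediate region (the papers use more careful, contrast-preserving approaches), and treating angles as flat 2D coordinates is a small-angle approximation:

```python
import math
from dataclasses import dataclass

@dataclass
class FoveationConfig:
    inner_deg: float = 10.0  # hypothetical: edge of the full-resolution central region
    outer_deg: float = 20.0  # hypothetical: start of the low-resolution outer region

def eccentricity_deg(x_deg: float, y_deg: float, gaze_x_deg: float, gaze_y_deg: float) -> float:
    """Angular distance from the gaze point, treating angles as planar coordinates."""
    return math.hypot(x_deg - gaze_x_deg, y_deg - gaze_y_deg)

def region_and_weight(ecc_deg: float, cfg: FoveationConfig) -> tuple:
    """Return (region name, weight of the high-resolution render at this point)."""
    if ecc_deg <= cfg.inner_deg:
        return "central", 1.0
    if ecc_deg >= cfg.outer_deg:
        return "outer", 0.0
    t = (ecc_deg - cfg.inner_deg) / (cfg.outer_deg - cfg.inner_deg)
    return "blend", 1.0 - t * t * (3 - 2 * t)  # smoothstep falloff from 1 to 0

cfg = FoveationConfig()
print(region_and_weight(eccentricity_deg(25, 0, 10, 0), cfg))  # ('blend', 0.5)
```

Because the regions are defined relative to the gaze point, they move with the eyes, which is exactly why the gaze tracking discussed next is needed.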

Techniques that were contrast-preserving and temporally stable gave the best results. The recent Nvidia and Google papers highlight a few different techniques, and the Google paper also introduces some post-processing techniques to better accommodate the lens distortion of HMDs when using foveated rendering. It turns out that simply LERPing (linearly interpolating) between the regions isn’t at all the best method.

If you’re interested in reading more in depth:

Understanding Pixel Density and Eye-Limiting Resolution

Understanding Foveated Rendering

A multi-resolution saliency framework to drive foveation

Latency Requirements for Foveated Rendering in Virtual Reality

Perceptually-Based Foveated Virtual Reality

Introducing a New Foveation Pipeline for Virtual/Mixed Reality

This all sounds great, but there’s one other thing we need to get this to actually work: eye, or gaze, tracking. After all, you can move your eyes (and your gaze) without moving your head. To make foveated rendering work, you need to know (in time to react to it) just where on the display the user is gazing. (The Nvidia paper states that they found a lag of up to 50–70 ms in updating the Window acceptable.) Since the gaze can land just about anywhere in the FOV, this requires scanning the eyes to detect gaze direction. This is also a very active field of research, and one that has seen significant progress (see products by Tobii and SMI, and Fraunhofer’s bi-directional photodirectional OLED display).

Unlocking the potential of eye tracking technology

It’s pretty much a solved problem; it just needs to be put into HMDs.
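To put the 50–70 ms tolerance from the Nvidia paper into display terms, it can be converted into frames. This sketch simply treats the whole tolerance as elapsed frame time, which is a simplification (tracking, rendering, and scan-out all share that budget):

```python
def latency_in_frames(latency_ms: float, refresh_hz: float) -> float:
    """How many display frames elapse during a given latency."""
    return latency_ms * refresh_hz / 1000.0

for hz in (90, 120):
    frames = [round(latency_in_frames(ms, hz), 1) for ms in (50, 70)]
    print(f"{hz} Hz: {frames} frames of lag")
# 90 Hz: [4.5, 6.3] frames of lag
# 120 Hz: [6.0, 8.4] frames of lag
```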

The basic technique is to shine infrared light on your eyes (inside the HMD) and look at the reflections. Some simple math extracts the pupil’s location, and the gaze direction can be deduced from where the pupil sits relative to the highlights (glints) the light leaves on the eye. This can all be done with some simple vision software. The output is then mapped to a pixel location on the HMD display.
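A minimal sketch of that pipeline using the common pupil-center/corneal-reflection idea. The offset between the pupil center and one glint in the eye-camera image is mapped to gaze angles, and those angles to a pixel on the panel. The linear calibration, its gain values, and the uniform-PPD, distortion-free display mapping are all simplifying assumptions; practical trackers typically use richer calibration models (such as polynomial fits or 3D eye models) and must account for HMD lens distortion. The 4960×4096 panel and 34.5 PPD are the estimates from earlier in this post:

```python
from dataclasses import dataclass

@dataclass
class Calibration:
    """Hypothetical per-user calibration: degrees of gaze per eye-camera pixel of
    pupil-glint offset, plus offsets. Fitted while the user looks at known
    targets; the signs depend on how the camera and display are oriented."""
    gain_x: float
    gain_y: float
    offset_x: float = 0.0
    offset_y: float = 0.0

def gaze_angles(pupil_px, glint_px, cal: Calibration) -> tuple:
    """Estimate (yaw, pitch) in degrees from the pupil center and one corneal
    glint in the eye-camera image, using a simple linear model."""
    dx = pupil_px[0] - glint_px[0]
    dy = pupil_px[1] - glint_px[1]
    return cal.gain_x * dx + cal.offset_x, cal.gain_y * dy + cal.offset_y

def angles_to_display_pixel(yaw_deg, pitch_deg, width, height, ppd) -> tuple:
    """Map gaze angles to a display pixel, assuming gaze (0, 0) hits the panel
    center and a uniform pixels-per-degree (no lens distortion).
    Pitch is positive upward; display y grows downward."""
    x = width / 2 + yaw_deg * ppd
    y = height / 2 - pitch_deg * ppd
    x = min(max(x, 0), width - 1)  # clamp to the panel
    y = min(max(y, 0), height - 1)
    return round(x), round(y)

cal = Calibration(gain_x=0.5, gain_y=-0.5)  # hypothetical calibration values
yaw, pitch = gaze_angles((330, 242), (310, 250), cal)
print(yaw, pitch)                                              # 10.0 4.0
print(angles_to_display_pixel(yaw, pitch, 4960, 4096, 34.5))   # (2825, 1910)
```

The resulting pixel is what would be fed in as the gaze point for the foveation regions.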

Did you know that eye tracking is already in Windows? Microsoft’s Eye Control came with the Windows 10 Fall Creators Update. It only works with an external tracker from Tobii, but it’s there.

So there you have it: you can soon expect to see foveated displays, which introduce the requirement for gaze tracking, and gaze tracking is desirable for VR/AR in its own right. So we’ll soon have very nice HMDs that give us about half-retina resolution with gaze tracking thrown in for free. VR is about to get a ton better.